Quantum computing certainly sounds like something from the future, not the present. Quantum mechanics itself is a field of science that baffles even the sharpest physicists.

Nevertheless, a group of researchers at the University of New South Wales in Sydney, Australia, have clearly understood it enough to achieve something incredible: For the first time, they have demonstrated that basic quantum computation using silicon is viable, paving the way for ultra-fast computer systems. The study is published in Nature.

Let’s take a step back here. Our world thrives on computing power, and our appetite for more of it grows every year. The famous Moore’s Law observes that the number of transistors on a microchip (roughly analogous to its computing power) doubles every 18 to 24 months. Of course, you cannot just keep adding transistors to a microchip; eventually, you will run out of space. Unless you shrink the transistors each time, that is.
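To see how quickly that doubling compounds, here is a small Python sketch. It assumes a fixed doubling period (24 months by default, the slow end of the range above). The starting count of one million transistors is a hypothetical figure chosen for round numbers, not real chip data.

```python
def projected_transistors(start_count: float, years: float, doubling_months: float = 24.0) -> float:
    """Project a transistor count forward, assuming a constant doubling period."""
    doublings = (years * 12) / doubling_months  # how many doubling periods fit in the span
    return start_count * 2 ** doublings

# Hypothetical starting point: one million transistors.
# Ten years at a 24-month pace is five doublings, i.e. 2**5 = 32 times as many.
print(projected_transistors(1_000_000, 10))  # 32000000.0

# At the faster 18-month pace, ten years is about 6.67 doublings.
print(round(projected_transistors(1_000_000, 10, doubling_months=18)))
```

Ten years at the 24-month pace gives a 32-fold increase. At the 18-month pace the same decade gives roughly a hundredfold increase.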

These days, the features on microchips and transistors are measured in extremely small units: millionths of a millimeter, or nanometers (there are about 25 million nanometers in one inch). As reported in The Economist, in 2002 a single U.S. dollar would buy you 2.6 million transistors with features only 180 nanometers (roughly seven millionths of an inch) in size. Today, for the same price, you could get 19 million transistors with features no larger than 16 nanometers.
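These unit conversions are easy to check. The sketch below uses the exact definition of the inch (25.4 mm), which gives 25.4 million nanometers per inch. The helper name `nm_to_inches` is just for illustration.

```python
# 1 inch = 25.4 mm exactly, and 1 mm = 1,000,000 nm, so 1 inch = 25,400,000 nm.
NM_PER_INCH = 25_400_000

def nm_to_inches(nm: float) -> float:
    """Convert a length in nanometers to inches."""
    return nm / NM_PER_INCH

print(f"{nm_to_inches(180):.2e} in")  # 7.09e-06 in (about seven millionths of an inch)
print(f"{nm_to_inches(16):.2e} in")   # 6.30e-07 in (well under a millionth of an inch)
```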

Transistors cannot keep shrinking this way forever, though. With standard materials, if chips keep following the same pattern of ever more processing power packed into ever smaller dimensions, their features will eventually reach the size of individual atoms… and we can’t make a transistor smaller than an atom, right?

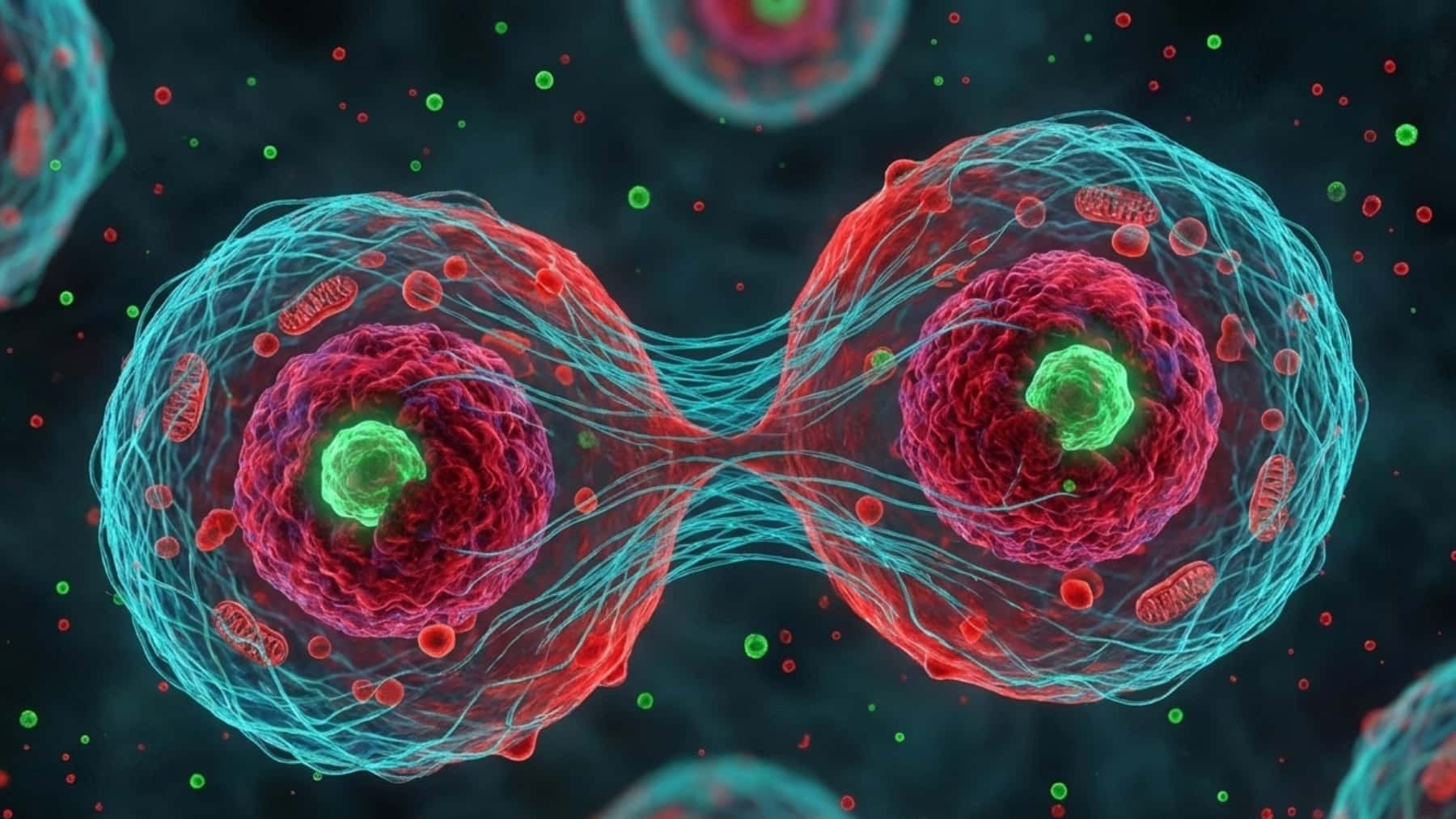

Wrong. This is where quantum computing comes in. Ordinary digital computers store and process information as bits, in binary: each of the smallest pieces of information is either a 1 or a 0. Long strings of 1s and 0s make up the data streams that computers work with. In quantum computing, bits are replaced by quantum bits, or qubits.

Qubits take advantage of the quirky fact that a quantum particle can exist in two physical states at once, a phenomenon known as superposition. The two possible states are combined (superimposed) into a single quantum state. When an instrument measures the particle, however, it always finds one state or the other, with odds set by the superposition. In the same way, a qubit can be in the standard binary state 0 or 1, or in a superposition of the two: in a sense, both 0 and 1 at once.
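The textbook description of a qubit gives a more concrete picture. It uses two complex numbers, or amplitudes: one for 0 and one for 1. Measuring the qubit gives 0 with probability equal to the squared magnitude of the first amplitude, and 1 otherwise. The sketch below is a toy classical simulation of that standard model, not a description of how the UNSW silicon device works. The function name `measure` and the fixed random seed are illustrative choices.

```python
import math
import random

def measure(alpha: complex, beta: complex, rng: random.Random) -> int:
    """Simulate measuring a qubit in the state alpha|0> + beta|1>.

    The amplitudes must be normalized: |alpha|^2 + |beta|^2 == 1.
    Returns 0 with probability |alpha|^2 and 1 with probability |beta|^2.
    """
    p0 = abs(alpha) ** 2
    p1 = abs(beta) ** 2
    if not math.isclose(p0 + p1, 1.0):
        raise ValueError("amplitudes must be normalized")
    return 0 if rng.random() < p0 else 1

# An equal superposition: "both 0 and 1" until it is measured.
amp = 1 / math.sqrt(2)
rng = random.Random(42)
outcomes = [measure(amp, amp, rng) for _ in range(10_000)]
fraction_zero = outcomes.count(0) / len(outcomes)
print(fraction_zero)  # close to 0.5: each measurement yields a definite 0 or 1
```

Each individual measurement returns a plain 0 or 1. The superposition only shows up in the statistics over many repeated runs.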

So, whereas each bit in a standard computer holds just one of two states at a time, a quantum computer can hold information across many states at once. For certain kinds of problems, this could let it calculate far faster than any conventional computer.
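One way to see why this matters is to count the numbers involved. Describing the state of n qubits takes 2^n amplitudes, so the bookkeeping doubles with every qubit added. The sketch below uses hypothetical helpers, not any real library. It counts the memory an ordinary computer would need to store that full state, assuming 16 bytes per complex amplitude (two 64-bit floating-point numbers).

```python
def amplitudes_needed(n_qubits: int) -> int:
    """Number of complex amplitudes describing the state of n qubits."""
    return 2 ** n_qubits

def classical_memory_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Bytes needed to store the full n-qubit state on an ordinary computer,
    assuming each amplitude is stored as a 16-byte complex number."""
    return amplitudes_needed(n_qubits) * bytes_per_amplitude

for n in (1, 2, 10, 30, 50):
    print(n, amplitudes_needed(n), classical_memory_bytes(n))
```

At 30 qubits the full state already takes about 17 GB. At 50 qubits it takes about 18 petabytes.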

Quantum computation has been demonstrated before using superconductors cooled to extremely low temperatures, as reported in a separate study in Science. However, that approach is vastly more expensive to maintain than this newer setup, which uses more readily available silicon.

If a fully operational quantum computer grows out of this study, it is no exaggeration to say that it will revolutionize the world. Medical research, complex physics simulations, the analysis of crime, weather and financial patterns, and data encryption techniques will all be dramatically enhanced the day quantum computing takes over from digital computing.

www.iflscience.com